The success of a policy in solving the intended problem or remediating the target abuse depends heavily on how accurately the policy is enforced. Because most platforms handle a large volume of content, often millions of pieces per month globally, policy enforcement needs to be consistent and scalable. Operations teams bridge the gap between a rule on paper (i.e., the policy) and a rule in practice. The following are the primary steps to operationalize a policy so that it’s ready for enforcement by content moderators:

- Translating the policy into clear “enforcement guidelines,” also known as “operational guidelines”;

- Developing the appropriate review interface;

- Training content moderators.

| Note: Certain steps, such as developing the appropriate review interface or training, may not be needed in every situation. For example, if the use case is to operationalize an update or minor change to an existing policy, a review interface for the broader policy might already exist. Similarly, since reviewers already know the high-level concept of the policy, a minor policy update could be communicated in writing without a full-fledged training. |

Translating Policy Into Enforcement Guidelines

While the policy outlines what is and is not allowed on the platform, reviewers also need to know the following to enforce it appropriately. Enforcement guidelines capture this information:

- How to identify a policy violation;

- Supporting information to arrive at the appropriate decision;

- Which enforcement action to take.

How to Identify a Policy Violation

A piece of content can include multiple elements and multiple types of information, often of varying importance. Take the example of a video of poppy flowers. This video may include multiple elements, such as the visual of poppy flowers, accompanying audio, a title or caption describing the video, the name of the person or entity uploading the video, and the video’s thumbnail. Similarly, a photo may include a title or caption, overlay text, or the name of the person or entity uploading it. Content moderation involves reviewing all these individual elements to arrive at a final decision, and enforcement guidelines should explain “how” to do so.

Returning to the example of the video of poppy flowers, consider the various possibilities of how the video could be posted:

- Video of poppy flowers with no audio and no caption;

- Video of poppy flowers, but the audio includes language to bully someone;

- Video of poppy flowers with relaxing instrumental music, but its title is “How to make your own opium without getting arrested”;

- Video of poppy flowers with relaxing instrumental music, titled “My flower garden” but the name of the uploader is “Natural Opium 4 U”;

- Video of poppy flowers with relaxing instrumental music, titled “My flower garden,” but there’s pornography in the video at the 42-minute mark;

- Video of poppy flowers with no audio and no caption, but comments on the video include hate speech.

Enforcement guidelines help the content moderator make a decision across possibilities such as the video examples above. In this case, enforcement guidelines would clearly outline whether the reviewer should consider the title, audio, caption, etc., how much weight to give each element, and which combination of violating elements makes the overall content a violation. Guidelines may also specify an order of operations, so that reviewers know which element to review first.
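To make weights and an order of operations concrete, here is a minimal sketch of how a guideline could combine per-element findings into one decision. The element names, weights, and threshold are all hypothetical. In this sketch comments carry zero weight (on the assumption that they are written by other users and reviewed as separate content), and an uploader name alone is not enough to make the video violating.

```python
from typing import Optional

# Hypothetical order of operations: reviewers check elements in this sequence.
REVIEW_ORDER = ["video", "audio", "title", "caption", "uploader_name", "thumbnail", "comments"]

# Hypothetical weights: how much a violation in each element counts toward the
# overall decision. Comments are weighted 0 on the assumption that they are
# written by other users and reviewed as separate pieces of content.
ELEMENT_WEIGHTS = {
    "video": 1.0,
    "audio": 1.0,
    "title": 1.0,
    "caption": 1.0,
    "uploader_name": 0.5,
    "thumbnail": 1.0,
    "comments": 0.0,
}

# Hypothetical threshold: total weight at which the content as a whole violates.
VIOLATION_THRESHOLD = 1.0


def review_content(findings: dict[str, Optional[str]]) -> tuple[bool, list[str]]:
    """Check elements in REVIEW_ORDER and combine their weights into one decision.

    `findings` maps an element name to the violation found in it (or None).
    Returns (is_violating, flagged_elements).
    """
    score = 0.0
    flagged = []
    for element in REVIEW_ORDER:
        violation = findings.get(element)
        if violation is None:
            continue
        flagged.append(f"{element}: {violation}")
        score += ELEMENT_WEIGHTS[element]
    return score >= VIOLATION_THRESHOLD, flagged


# A title promoting drug production is enough on its own.
print(review_content({"title": "drug production instructions"}))
# → (True, ['title: drug production instructions'])

# Hate speech only in the comments does not make the video itself violating here.
print(review_content({"comments": "hate speech"}))
# → (False, ['comments: hate speech'])
```

A suspicious uploader name (weight 0.5) only tips the decision when combined with another violating element, which is one way a guideline can express "which combination of violating elements" counts.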

Supporting Information to Arrive at the Appropriate Decision

Enforcement guidelines can also provide supporting information to aid decision making. Some examples include:

- A hierarchy within a policy, if the policy has various tiers and severities of violations. For example, if the content contains multiple bullying violations, a hierarchy helps the moderator decide which sub-violation carries the most weight and should be prioritized. A hierarchy is critical for:

  - Accurate enforcement data: Enforcement data that accurately represents the distribution and volume of policy violations helps platforms prioritize future investments to reduce and prevent abuse. In some cases, enforcement decisions are also used as training data for machine learning classifiers, so decisions need to be accurate at a granular, sub-policy level. This may matter less depending on the goal of the classifier and its precision targets.

  - User experience: The hierarchy makes little difference to the user if all violations lead to the same enforcement action. However, if, for example, one section of the bullying policy leads to removal of the content while another section results only in content demotion, selecting the correct bullying sub-violation is key from a user experience standpoint.

- Lists of keywords or key terms that can indicate violations, such as a slur list in the case of hate speech, or a list of known entities or organizations engaged in policy-violating behavior.

- Market-specific terms or guidance: Since Community Standards are often global, accurate enforcement sometimes requires supporting regional or language-specific guidance. For example, the policy on inciting violence might require additional guidance specifically for a region with ongoing conflict. Or a word with no humorous meaning in English may have a humorous meaning in another language, say Portuguese; in this case, Portuguese reviewers would need guidance on whether they can treat that word as humor in all scenarios.
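A hierarchy can be encoded as an ordered list of sub-violations, so that when a moderator spots several, only the top-ranked one determines the action and is recorded. The sketch below assumes hypothetical bullying sub-policy names and actions; it illustrates how ranking keeps both the action and the enforcement data consistent.

```python
from collections import Counter

# Hypothetical bullying sub-policies, listed from highest to lowest priority,
# each with the action taken when it is the top-ranked sub-violation.
BULLYING_HIERARCHY = [
    ("threat_of_harm", "remove"),
    ("targeted_degradation", "remove"),
    ("mild_insult", "demote"),
]
RANK = {name: rank for rank, (name, _) in enumerate(BULLYING_HIERARCHY)}
ACTION = dict(BULLYING_HIERARCHY)


def top_violation(found: list[str]) -> tuple[str, str]:
    """Return the highest-priority sub-violation in a non-empty list, and its action."""
    top = min(found, key=RANK.__getitem__)
    return top, ACTION[top]


# Three reviewed items, each with every sub-violation the moderator spotted.
reviewed = [
    ["mild_insult", "threat_of_harm"],
    ["mild_insult"],
    ["targeted_degradation", "mild_insult"],
]
# Recording only the top-ranked label per item keeps enforcement data
# (and any classifier training labels) consistent across moderators.
label_counts = Counter(top_violation(found)[0] for found in reviewed)

print(top_violation(reviewed[0]))  # → ('threat_of_harm', 'remove')
```

Without the ranking, the first item could be logged as either a threat (removed) or a mild insult (demoted), depending on which violation the moderator noticed first.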

Which Enforcement Action to Take

Once content moderators know how to identify a policy violation, they need guidance on which enforcement action to take in each scenario. Below are a few examples of how such guidance could be structured for content.

- Bullying and harassment: If a video includes a bullying policy violation AND the caption promotes the violation, then DELETE the video.

- Hate speech: If a video includes a hate speech policy violation AND the caption condemns the violation, then ALLOW the video.

- Nudity/Child Safety: If a photo includes nudity AND depicts a minor, then DELETE the photo and ESCALATE for further child safety investigation.

Note: Depending on the scale of content moderation, guidance could also explain how to identify a minor, to ensure consistent enforcement (e.g., check the age listed in the bio, look for certain features in the photo).

| Media (e.g., photo, video) | Caption | Impacted User | Action |
|---|---|---|---|
| Includes bullying as defined by policy | Promotes the violation | Adult | Delete |
| Includes hate speech as defined by policy | Condemns the violation | Adult | Allow |
| Includes nudity as defined by policy | No caption | Adult | Delete |
| Includes nudity as defined by policy | No caption | Minor | Delete and escalate for further investigation of child safety |
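Because each row of the content-level table is an independent rule, the table maps naturally onto a lookup keyed by its column values. The sketch below is one way to encode it; the string labels are hypothetical, and sending unlisted combinations to a secondary review queue is an assumption rather than part of the guidance above.

```python
# The rows of the content-level table, keyed by (media, caption, impacted_user).
# The string labels are hypothetical identifiers for the table's cell values.
ACTION_TABLE = {
    ("bullying", "promotes", "adult"): "delete",
    ("hate_speech", "condemns", "adult"): "allow",
    ("nudity", "none", "adult"): "delete",
    ("nudity", "none", "minor"): "delete_and_escalate_child_safety",
}


def enforcement_action(media: str, caption: str, impacted_user: str) -> str:
    """Look up the action for a combination of labels.

    Combinations the guidelines do not cover go to a hypothetical secondary
    review queue rather than receiving a guessed action.
    """
    return ACTION_TABLE.get((media, caption, impacted_user), "send_to_secondary_review")


print(enforcement_action("nudity", "none", "minor"))        # → delete_and_escalate_child_safety
print(enforcement_action("bullying", "condemns", "adult"))  # → send_to_secondary_review
```

Keeping the rules as data rather than nested conditionals makes it easier to review the table against the written policy and to update a single row when the policy changes.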

Similar guidance can be defined at the account level, based on the account’s recent content and profile attributes:

| Account | Content Posted in the Past X Days | Description / About Section | Title | Profile / Cover Photo | Action |
|---|---|---|---|---|---|
| User profile | > 30% violates policy | None | N/A | No violation | Disable profile |
| User profile | < 30% violates policy | None | N/A | No violation | Allow |
| Business entity | < 30% violates policy | Violates policy | No violation | Violates policy | Remove entity |
| Business entity | No policy violations | Violates policy | Violates policy | Violates policy | Remove entity |

The examples above are for illustration only; platforms’ thresholds and account-level policies may vary.
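The account-level rows combine a content-share threshold with checks on profile surfaces. A minimal sketch of that logic follows. The field names are hypothetical, 30% is only the example threshold from the table, and the business-entity rule (remove when at least two of description, title, and profile/cover photo violate) is an assumed generalization of the two rows shown, not a stated policy.

```python
from dataclasses import dataclass

CONTENT_SHARE_THRESHOLD = 0.30  # example value from the table; real thresholds vary
SURFACE_THRESHOLD = 2           # hypothetical: violating profile surfaces that trigger removal


@dataclass
class Account:
    kind: str             # "user_profile" or "business_entity"
    posts_recent: int     # content posted in the past X days
    posts_violating: int  # how many of those posts violate policy
    description_violates: bool = False
    title_violates: bool = False
    photo_violates: bool = False  # profile or cover photo


def account_action(acct: Account) -> str:
    if acct.kind == "user_profile":
        share = acct.posts_violating / acct.posts_recent if acct.posts_recent else 0.0
        # The table does not say what happens at exactly 30%; this sketch allows it.
        return "disable_profile" if share > CONTENT_SHARE_THRESHOLD else "allow"
    # Business entities: both table rows remove the entity when at least two
    # profile surfaces violate, regardless of the content share.
    surfaces = sum([acct.description_violates, acct.title_violates, acct.photo_violates])
    return "remove_entity" if surfaces >= SURFACE_THRESHOLD else "allow"


print(account_action(Account("user_profile", 100, 31)))  # → disable_profile
print(account_action(Account("business_entity", 100, 10,
                             description_violates=True, photo_violates=True)))  # → remove_entity
```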

| Note: In some cases, the choice of enforcement action could be automated, with reviewers only deciding what kind of policy violation exists in the content. More on this in the section on decision trees below. |

Developing Appropriate Review Interfaces

A reviewer’s success in accurately determining a policy violation also depends on the interface in which they are moderating the content. Answering the following questions can help identify the appropriate interface for content moderation.

- Do reviewers have access to all the information needed to enforce as intended?

- How can we drive efficiency and support reviewer resilience while displaying information?

Do reviewers have access to all the information needed to enforce as intended?

Apart from the content itself, below are common types of information required to make decisions on policy violations.

Account Attributes

This includes basic information about the owner and the reporter of the content, such as profile and cover photos, name, minor status, number of followers and accounts followed, verified status, country, etc. Although many account- and content-level attributes could inform the review process, it’s important to critically evaluate and prioritize must-have information over nice-to-have information, because more information than necessary can overwhelm the reviewer or increase average review time, negatively impacting enforcement accuracy and operational cost.
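One way to apply the must-have versus nice-to-have distinction in a review tool is to show a fixed core set of attributes and let each queue opt in to extras. The sketch below assumes a hypothetical split of attributes; which ones are must-have would depend on the policy being enforced (for example, minor status for child safety queues).

```python
# Hypothetical split of account attributes for a review tool.
MUST_HAVE = ("name", "profile_photo", "is_minor", "country")
NICE_TO_HAVE = ("cover_photo", "followers", "following", "verified")


def reviewer_view(account: dict, extra_fields: tuple = ()) -> dict:
    """Always show must-have attributes; add a nice-to-have one only when a queue requests it.

    Requested fields outside NICE_TO_HAVE are ignored, so queues cannot
    silently expand the view beyond the agreed attribute set.
    """
    fields = MUST_HAVE + tuple(f for f in extra_fields if f in NICE_TO_HAVE)
    return {f: account.get(f) for f in fields}


account = {"name": "example", "profile_photo": "p.jpg", "is_minor": False,
           "country": "BR", "followers": 10, "verified": True}
print(reviewer_view(account, extra_fields=("followers",)))
```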

Decision Trees

A decision tree is a way of structuring enforcement actions in a tree-like model, visually displaying decisions and, in some cases, their potential outcomes. Decision trees can be single-step or can include multiple “branches” that content moderators evaluate to select the best course of action. In most cases, each step or branch in the decision tree includes policy categories so content moderators can select the appropriate policy violation.

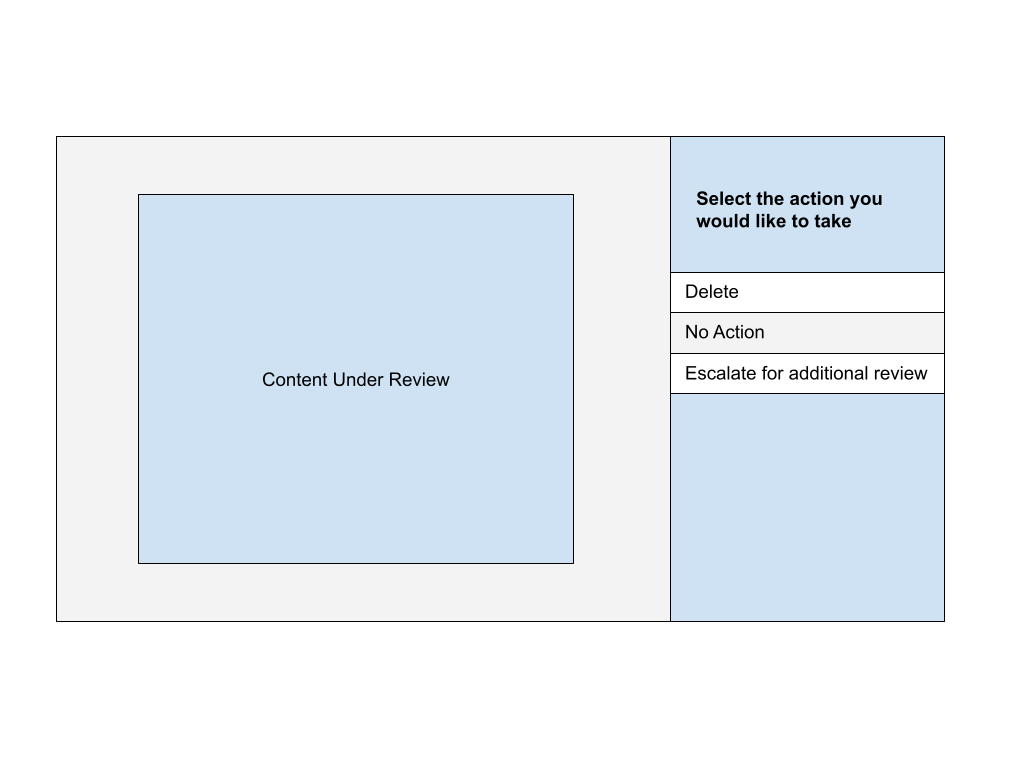

The image above shows a simplified example of an interface in which content moderators review the content and select one of the options from the decision tree. (See Figures 1 and 2 below for examples of a single-step decision tree and Figure 3 for an example of a multi-step decision tree.)
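Underneath the interface, a multi-step decision tree can be modeled as nested questions whose leaves are policy labels; a single-step tree is simply one question whose options are all leaves. The sketch below uses a hypothetical two-step tree and a `walk` helper (also hypothetical) that follows a moderator’s selections.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    question: str
    # answer -> next Node, or a leaf policy label (str)
    options: dict = field(default_factory=dict)


# Hypothetical two-step tree: pick a policy area, then (for bullying) a sub-category.
TREE = Node("Which policy does the content violate?", {
    "none": "no_violation",
    "bullying": Node("Which bullying sub-policy?", {
        "threat": "bullying/threat",
        "insult": "bullying/insult",
    }),
    "hate_speech": "hate_speech",
})


def walk(tree: Node, answers: list[str]) -> str:
    """Follow the moderator's selections branch by branch until a leaf label is reached."""
    node = tree
    for answer in answers:
        node = node.options[answer]
        if isinstance(node, str):
            return node
    raise ValueError("answers ended before reaching a leaf")


print(walk(TREE, ["bullying", "threat"]))  # → bullying/threat
print(walk(TREE, ["none"]))                # → no_violation
```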

Additionally, decision trees can be built either to make decisions automatically based on the characteristics of the content or to require reviewers to take the enforcement decision manually.

- Decision first: In a ‘decision first’ tree, the reviewer’s first step is to select the appropriate enforcement action (e.g., delete, no action, disable) for a piece of content. A decision first tree can reduce review time because reviewers arrive at the final decision at the outset. However, this option risks low precision, especially for policies that are nuanced and complex.

- Information first: In an ‘information first’ tree, reviewers only label the characteristics of the content, and a decision is taken automatically based on the combination of characteristics. For example, a reviewer could use the labeling tree to indicate that a piece of content includes a false claim about a cure for COVID-19, or that it includes harmful speech targeting a protected characteristic. In this case, the reviewer is not deciding which enforcement action to take but merely identifying what is happening in the content. Depending on the granularity of the tree, this option can drive high precision in enforcement. However, more granularity also increases the time taken to review each piece of content, which translates into higher operational cost.
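In an information-first setup, the step that turns labels into an action can be a small ordered rule list. The sketch below assumes hypothetical label names and rules (loosely mirroring the examples in this section); rules are checked in order and the first whose labels are all present wins, so more specific rules must come before more general ones.

```python
# Hypothetical labels and rules for an information-first tree. Reviewers only
# submit labels; the action is derived from them. The first rule whose labels
# are all present wins, so more specific rules come first.
RULES = [
    ({"nudity", "minor"}, "delete_and_escalate"),
    ({"nudity"}, "delete"),
    ({"false_covid_cure_claim"}, "delete"),
    ({"attack_on_protected_characteristic", "condemning_context"}, "allow"),
    ({"attack_on_protected_characteristic"}, "delete"),
]


def auto_decide(labels: set[str]) -> str:
    for required, action in RULES:
        if required <= labels:  # every label the rule needs was applied
            return action
    return "no_action"


print(auto_decide({"attack_on_protected_characteristic", "condemning_context"}))  # → allow
print(auto_decide({"attack_on_protected_characteristic"}))                        # → delete
```

In a decision-first tree the reviewer would pick "allow" or "delete" directly; here the same distinction depends only on whether the reviewer applied the `condemning_context` label, which is why label granularity drives precision.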

How can we drive efficiency and support reviewer resilience while displaying information?

Features for Efficiency and Resilience

Below are some common features to drive efficiency and support reviewer resilience in how the information is displayed.

- Option to blur photos. Since content can often include graphic imagery, this option will allow content moderators to view or blur the photo as needed during the review process.

- Keyword highlighting, so that content moderators can easily identify violating content, especially when they have to review content with hundreds of words.

- Showing thumbnails from throughout the video, so moderators can skip to the potentially violating part, especially in long videos.

- Showing video transcripts with optional English translation, so moderators can skip to the potentially violating part of the audio.

- Previews of external links, including their name and caption, so moderators don’t have to click through and investigate external URLs. This especially helps keep average review time down.

- Ability to search policies. Content moderators often have to remember all of the Community Standards (i.e., dozens of policies), each of which could have multiple sections and subsections. A keyword-based policy search within the review interface can help them quickly refer to the relevant policy while reviewing tricky examples.

- Ability to translate content to English, or to reroute it to the appropriate language reviewer.

- Option to turn images black and white. This aims to reduce the realism of the sensitive images being reviewed (e.g., graphic content with gore and violence).

- Option to change the audio frequency. This is likewise intended to create distance between the content and the moderator’s own reality.

- Ability to change the playback speed of videos. This is especially useful when reviewers have to investigate long videos, or to slow down at a part of the video that likely contains a violation.
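As an illustration of the keyword highlighting feature, the sketch below marks whole-word, case-insensitive matches from a term list. The `highlight` function and its `marker` parameter are hypothetical; it uses Python’s standard `re` module and assumes keywords begin and end with word characters, since the `\b` boundaries would not match otherwise.

```python
import re


def highlight(text: str, keywords: list[str], marker: str = "**") -> str:
    """Wrap each whole-word, case-insensitive keyword match in `marker`."""
    if not keywords:
        return text
    # Longest keywords first so a multi-word term wins over a term it contains.
    alternatives = [re.escape(k) for k in sorted(keywords, key=len, reverse=True)]
    pattern = re.compile(r"\b(" + "|".join(alternatives) + r")\b", re.IGNORECASE)
    # Keep the original casing of the matched text.
    return pattern.sub(lambda m: f"{marker}{m.group(0)}{marker}", text)


print(highlight("Natural opium for you, OPIUM cheap", ["opium", "natural opium"]))
# → **Natural opium** for you, **OPIUM** cheap
```

Word boundaries avoid flagging longer words that merely contain a keyword (such as "opiumlike"), which would otherwise add noise for the moderator.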

Training Content Moderators

Once guidelines and review interfaces have been finalized and before fully launching a policy change, content moderators need to be trained on the change. While the training material usually includes policy language, enforcement guidelines, examples, and visuals of the review interface (in case of a new workflow), the “how” of training can vary. Depending on the capacity model and the size of the change, platforms commonly use one of the following methods for training.

- Direct training: Direct training may be feasible when moderation is done by employees or in-house contractors, since the platform has direct contact with them.

- Train the trainer: This method is mostly used when working with external vendors on large-scale workflows. Because platforms cannot directly train thousands of vendor employees, they train a selected set of trainers within the vendor organization, who then train their own employees over a predefined duration.

- E-learning: E-learning is often preferred, especially when the organization has a well-established training function that can create interactive e-learning modules within the desired turnaround. E-learning is also preferred when a new policy or policy change is small, i.e., when it is generally understood to be simple enough to be absorbed without interaction with a trainer or policy expert.

- Communication only: Another option for small policy or process changes is to send detailed communication to content moderators, along with the training material, to enable self-learning.

- Practice tests: These can be used both internally and for vendors. Practice tests usually include a set of mock examples where moderators will have to make an enforcement decision.
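Scoring a practice test amounts to comparing each moderator’s decisions on the mock examples with the expected ones. The sketch below assumes a hypothetical answer key and pass mark; breaking misses down by expected decision shows whether a moderator tends to over-enforce (missing "allow" items) or under-enforce.

```python
from collections import Counter

PASS_MARK = 0.9  # hypothetical accuracy a moderator needs before reviewing live content


def score_practice_test(answer_key: dict[str, str], responses: dict[str, str]) -> tuple[float, Counter]:
    """Compare a moderator's decisions with the expected ones.

    Returns overall accuracy and, for each expected decision, how many items
    the moderator got wrong (unanswered items count as wrong).
    """
    misses = Counter()
    correct = 0
    for item_id, expected in answer_key.items():
        if responses.get(item_id) == expected:
            correct += 1
        else:
            misses[expected] += 1
    return correct / len(answer_key), misses


answer_key = {"mock1": "delete", "mock2": "allow", "mock3": "delete", "mock4": "escalate"}
responses = {"mock1": "delete", "mock2": "delete", "mock3": "delete"}
accuracy, misses = score_practice_test(answer_key, responses)
print(accuracy, accuracy >= PASS_MARK)  # → 0.5 False
```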