Content Moderation

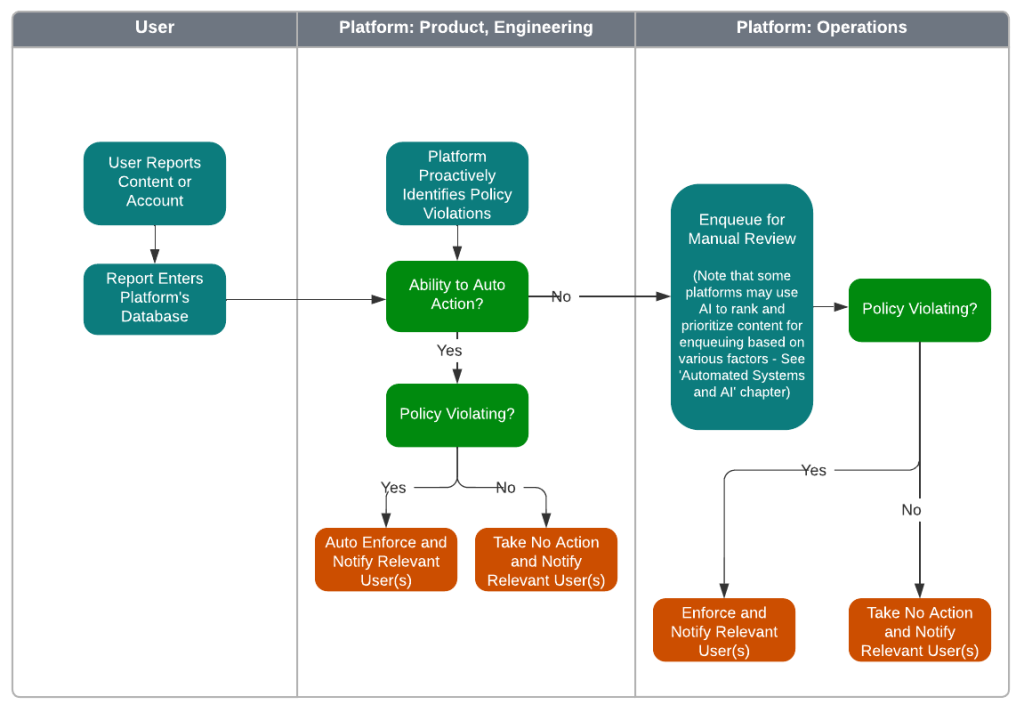

Content moderation is the process of reviewing online user-generated content for compliance with a digital platform’s policies regarding what is and what is not allowed to be shared on the platform. These policies are often known as community standards. Moderating content and enforcing policy is done manually by people, through automation, or through a combination of both, depending on the scale and maturity of the abuse and of a platform’s operations.
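As one way to picture a combination of manual and automated moderation, the minimal sketch below routes a piece of content according to a score from an automated classifier. Everything in it is an assumption made for illustration: the function name `route`, the idea of a single violation score between 0 and 1, and both threshold values are hypothetical, not a description of how any particular platform operates.

```python
# Hypothetical thresholds, chosen only for illustration.
AUTO_ACTION_THRESHOLD = 0.95   # at or above this, automation enforces directly
HUMAN_REVIEW_THRESHOLD = 0.60  # at or above this, a human moderator reviews


def route(violation_score: float) -> str:
    """Decide who handles one piece of content.

    `violation_score` is an assumed classifier output in [0, 1], where
    higher values mean the content is more likely to violate policy.
    """
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    if violation_score >= AUTO_ACTION_THRESHOLD:
        return "automated_enforcement"
    if violation_score >= HUMAN_REVIEW_THRESHOLD:
        return "human_review"
    return "no_action"


if __name__ == "__main__":
    for score in (0.99, 0.70, 0.10):
        print(score, route(score))
```

In a sketch like this, lowering the review threshold sends more content to human moderators, which is the kind of staffing and tooling trade-off the operations, product, and engineering functions would weigh together.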

Content moderation is managed by the operations function in trust and safety, in close collaboration with the engineering, product, and policy functions. Operations teams are responsible for establishing the appropriate staffing and workforce model for content moderation and for building sustainable processes to monitor and control the health of the content moderation system. Policy is responsible for creating the rules that determine what content is and is not allowed on a platform, which the content moderation system then enforces (see Creating and Enforcing Policy). Product and engineering teams support the content moderation process by building the required tools and infrastructure, both for manual enforcement by content moderators and for automation that scales enforcement, and by designing and building the end-to-end user experience in the context of content moderation (see Automated Systems and Artificial Intelligence). The three functions of product, policy, and operations also collaborate to develop the medium- and long-term strategy for trust and safety on their platform.

The way trust and safety teams are structured to own the three functions varies; however, the collaboration between them is crucial for building and maintaining a robust content moderation system. For example, Company A could have a single team focusing on policy and operations, whereas Company B may structure their trust and safety organization to keep these functions under two different teams.

Scope of Moderation

In practice, content moderation can mean moderating individual content and/or actors and their behavior on the platform. Individual content is user-generated content (UGC), which includes media, posts, or comments shared by users on a platform. Actors are users or entities who are responsible for sharing content or for policy-violating behavior. Behavior includes policy-violating behavior which may become evident over a period of time and over the course of multiple actions (e.g., sending objectionable messages to multiple users, artificially boosting the popularity of content, or engaging in behaviors designed to enable other violations).
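To illustrate how behavior can become evident only across multiple actions, the sketch below looks for actors who sent flagged messages to many distinct recipients within a short time window. It is a hedged example under stated assumptions: the `FlaggedMessage` record, the function name, and the window and limit parameters are all hypothetical, and the messages are assumed to have already been flagged as objectionable by some earlier review step.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class FlaggedMessage:
    """A message already judged objectionable (hypothetical record)."""
    sender_id: str
    recipient_id: str
    timestamp: float  # seconds since an arbitrary reference point


def actors_exceeding_recipient_limit(messages, window_seconds, max_recipients):
    """Return the set of sender IDs who sent flagged messages to more than
    `max_recipients` distinct recipients within any span of `window_seconds`.
    """
    by_sender = defaultdict(list)
    for message in messages:
        by_sender[message.sender_id].append(message)

    flagged_actors = set()
    for sender_id, sent in by_sender.items():
        sent.sort(key=lambda m: m.timestamp)
        # Start a window at each message and count distinct recipients in it.
        for i, first in enumerate(sent):
            recipients = {
                m.recipient_id
                for m in sent[i:]
                if m.timestamp - first.timestamp <= window_seconds
            }
            if len(recipients) > max_recipients:
                flagged_actors.add(sender_id)
                break
    return flagged_actors
```

No single message in this example identifies the actor; the signal comes from the pattern across messages, which is why actor- and behavior-level moderation is treated separately from moderation of individual content.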

Results of Moderation

Any one or more of the following actions could be the outcome of content moderation: content deletion; banning; temporary suspension; feature blocking; reducing visibility; labeling; demonetization; withholding payments; referral to law enforcement. Read more about enforcement actions in Creating and Enforcing Policy.

Why Is Content Moderation Important?

Most platforms began content moderation as a means to comply with legal obligations and to ensure user safety by moderating illegal and abusive content. However, digital platforms increasingly invest in content moderation for a variety of reasons, both to address content flagged by their users and to proactively minimize content or behavior that does not comply with community standards. Note that while the points below summarize the common rationale for content moderation, the investment itself has compounded in the last decade with the proliferation of UGC on digital platforms (see Industry Overview).

- Ensuring safety and privacy. Removing content that could contribute to a risk of harm to people’s physical security, and protecting personal privacy and information.

- Supporting free expression. Moderation and safety enable expression and give people a voice. If people don’t feel safe, they won’t feel empowered to express themselves.

- Generating trust, revenue, and growth. Content moderation is also a required business investment to ensure the desired traffic to and growth of the platform. Many platforms’ business models rely on subscription services, advertisers, or both. To attract and retain both their user base and their revenue, platforms invest in content moderation because it directly influences user trust and brand reputation, which in turn influence growth and revenue.

News Article: The biggest companies no longer advertising on Facebook due to the platform’s lack of hate-speech moderation

News Article: Here are the biggest brands that have pulled their advertising from YouTube over extremist videos