Defining Harm

There is no one-size-fits-all definition of harm: harms can vary in type, severity, longevity, and scale, and can differ depending on the nature and usage of the given product or platform. However, frameworks can provide a useful starting point for defining and identifying harm. One such framework is Microsoft’s Harms Model, which treats risk of injury, denial of opportunities, infringement of rights, and erosion of social and democratic structures as the dimensions of harm. Another approach is the Trust & Safety Professional Association’s (TSPA) taxonomy of Abuse Types, which classifies the illegal or problematic behaviors and content types most commonly seen on online platforms, such as violent or criminal behavior, offensive and objectionable content, and deceptive and fraudulent behavior. Researchers and academics across disciplines have also offered other lenses for conceptualizing harm and informing prevention or mitigation strategies, which can be adapted to the needs of different organizations (1, 2, 3).

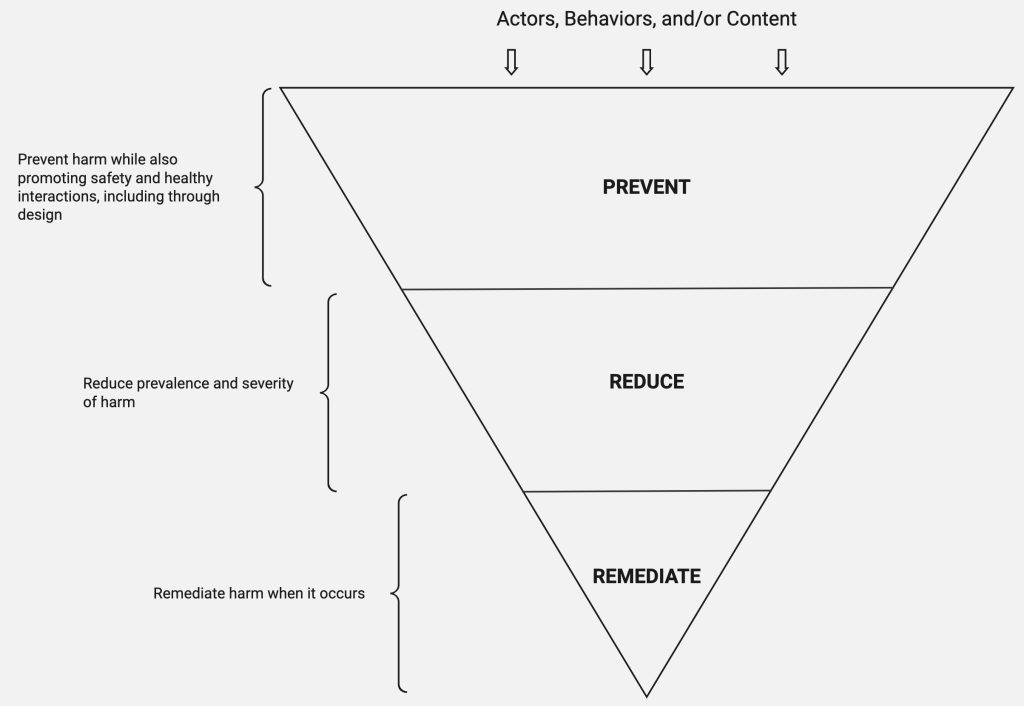

The goal of Safety by Design (SbD) is not to prevent every potential harm to end-users that could arise from use of a platform or service, but to:

- Build systems that promote safety and well-being;

- Reduce and prevent harm (including by enabling users to surface harm and offering them meaningful control over preventing it);

- Remediate harm by providing recourse when it occurs.

Content moderation, which reviews content after it has been created, has been the dominant approach adopted by technology companies. However, attention is now shifting toward harm prevention and safety promotion, which sit further upstream in the technology development lifecycle and are often the focus of SbD efforts.

Achieving this requires recognizing that the approach should apply not only to product and service development processes, but also to the design of organizations, their business processes, and their cultures. It is also worth noting that while safety is usually enhanced by privacy, security, accessibility, and user empowerment, these values can sometimes pull against each other. Safety and privacy can conflict, as in long-running policy debates around end-to-end encryption, which protects privacy but can undermine safety to the extent that it provides cover for high-severity illegal activity such as harm to children. Safety and free speech can also conflict, for example around hate speech and incitement to violence. Platforms should be thoughtful about the balance they strike among these values, having regard for business objectives, regulatory requirements, and user, community, and societal well-being.

The Benefits of Safety by Design

Safety by Design enables companies to better anticipate and address the potential negative impacts of the products they release on users, their communities, and society. It also delivers important business benefits:

- Maintain / build user trust: A safety-conscious culture can deliver safer and more accountable user experiences, which in turn can drive user trust, engagement, and long-term growth. Trust plays varying roles in the business strategies of different platforms, but can be particularly critical for online marketplaces and platforms that facilitate online and offline interactions (for example, dating platforms and ride-sharing apps).

- Protect / enhance brand reputation: Safety may be central to a platform’s mission or brand promise. For instance, safety or harm prevention is often a core stated value for social media platforms, alongside other stated values such as free speech, authenticity, and privacy. Safety by Design approaches breathe life into platforms’ corporate commitments to user well-being.

- Boost revenue and cut costs: Safety by Design can help stabilize revenue growth. Platforms that incorporate SbD are safer, which can increase user retention, improve brand reputation, and reduce the cost of incident response. Platforms driven by subscription or marketplace models typically experience more direct revenue and reputational impacts from inattention to safety, though platforms driven by advertising models are also exposed to the risk of advertiser flight. See, for example, the Global Alliance for Responsible Media (GARM) standards on brand risk and advertisement suitability.

- Regulatory compliance: Safety by Design is emerging as a core regulatory requirement. The idea of SbD is encoded in platform regulation such as the EU’s Digital Services Act (‘DSA’), the UK’s Online Safety Act (‘OSA’), Australia’s Online Safety Act and its Basic Online Safety Expectations, and Singapore’s Online Safety (Miscellaneous Amendments) Act. These laws, along with other online safety regulations around the world, require platforms to fulfill due diligence obligations, such as demonstrating robust reporting and complaints processes, meeting interface design requirements, and fulfilling transparency obligations, all to prevent and remediate harm to users. Laws like the DSA, the UK’s OSA, and the Irish Online Safety and Media Regulation Act (OSMR) require platforms to conduct routine risk assessments and build mitigation protocols, which are at the heart of Safety by Design. Auditing and accountability requirements in the EU’s AI Act, the DSA, and draft legislation such as the Algorithmic Transparency Act in the US reflect the SbD emphasis on designing and integrating safety into every stage of a product’s lifecycle. Safety by Design offers a practical baseline for compliance and enables platforms to scale efficiently, without the mounting costs of after-the-fact response. Ultimately, each platform will need to make significant business decisions about the right SbD approach for its unique design, business model, user base, and regulatory obligations.

Principles of Safety by Design

The following principles are meant to serve as guideposts for organizations implementing a Safety by Design program. They draw on the Privacy by Design principles originally proposed by a Canadian privacy regulator (the Information and Privacy Commissioner of Ontario), the World Economic Forum’s Global Principles on Digital Safety, and Australia’s eSafety Commissioner’s Safety by Design principles. The Digital Trust & Safety Partnership (DTSP) also provides excellent resources on best practices for digital trust and safety, as well as its SAFE Framework for safety risk assessments.

Holistic: Safety is considered across the product lifecycle

- Product development: Safety should begin at the inception of the product development process, with safety risk assessments and controls tracking each stage of the product lifecycle. This should include continuous improvement and growing maturity, driven by feedback loops from harm measurement and monitoring.

- Organizational: SbD principles should apply to all of the systems, processes, and tools used to build user-facing products. This includes organizational design and priorities, team composition, product development processes, and decision-making frameworks for all teams, not just Trust & Safety (T&S) teams. This holistic approach creates shared accountability for product safety.

Proactive: Safety efforts should target prevention

Build frameworks and processes to identify, assess, and prevent harm before it occurs, rather than primarily reacting to safety incidents after the fact. Safety risk assessments for new products and features, along with red-teaming exercises, are methods for identifying and addressing potential safety risks upstream. Proactive enforcement methods such as media hashing for child sexual abuse material (CSAM) and non-consensual intimate imagery (NCII) can help prevent users from encountering egregious content.
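To make the media-hashing idea more concrete, here is a minimal Python sketch of checking an upload's hash against a list of known hashes before the content is shown to anyone. It assumes the hashes have already been computed by a perceptual hashing algorithm (production systems rely on tools such as Microsoft's PhotoDNA or Meta's open-source PDQ, neither of which is implemented here) and that near-duplicates are detected by counting differing bits (Hamming distance). The function names, the hex encoding, and the `max_distance` threshold are hypothetical illustrations, not any vendor's API.

```python
from typing import Iterable, Optional


def hamming_distance(hex_a: str, hex_b: str) -> int:
    """Count the bits that differ between two equal-length hex-encoded hashes."""
    if len(hex_a) != len(hex_b):
        raise ValueError("hashes must have the same length")
    # XOR leaves a 1 bit wherever the two hashes disagree.
    return bin(int(hex_a, 16) ^ int(hex_b, 16)).count("1")


def find_match(candidate: str, known_hashes: Iterable[str], max_distance: int) -> Optional[str]:
    """Return the closest known hash within max_distance bits, or None.

    max_distance is a hypothetical tuning knob: 0 accepts exact matches only,
    while larger values tolerate resizing, re-encoding, or small edits.
    """
    best_hash: Optional[str] = None
    best_distance = max_distance + 1
    for known in known_hashes:
        distance = hamming_distance(candidate, known)
        if distance < best_distance:
            best_hash, best_distance = known, distance
    return best_hash


def should_block_upload(candidate: str, known_hashes: Iterable[str], max_distance: int = 8) -> bool:
    """Hypothetical upload gate: stop matching media before any user sees it."""
    return find_match(candidate, known_hashes, max_distance) is not None


if __name__ == "__main__":
    known = ["ffff0000ffff0000", "0123456789abcdef"]
    print(should_block_upload("ffff0000ffff0001", known))  # True (1 bit differs)
    print(should_block_upload("a5a5a5a5a5a5a5a5", known))  # False (no close match)
```

The threshold captures the core trade-off of proactive matching: a larger `max_distance` catches more altered copies but raises the risk of false positives, so a real deployment would likely route matches into review and reporting workflows rather than relying on silent rejection alone.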

Empowering: Safety features allow users to control their experience

- User consultation: Designing appropriate safety controls requires meaningful engagement with user communities to empower them with the information and controls to shape their own experiences. Consultation efforts should reflect a platform’s user base, with particular attention to disparately situated and vulnerable groups such as children, differently abled populations, and linguistic and cultural minorities.

- User controls: Safety is complex; people experience safety, or its absence, differently depending on their identity and circumstances, and users are often the best judges of their own safety. User controls and consent forms should be intuitive and widely accessible. Privacy settings, sensitive media settings, and block and mute controls can help users take charge of their own experiences.

Synergistic: Privacy and security practices enhance safety

Investments in privacy and security, such as high default privacy settings and strong security measures, generally benefit users by shielding their personal data from misuse, giving them control over and visibility into how that data is used, and securing it against unauthorized access. In practice, this means requiring privacy and security risk reviews and safeguards, and committing to use systems, products, and services that adhere to privacy and security by design practices.

Transparent: Safety is enhanced through openness

Transparency about how products are designed and the rules that govern them helps users make informed choices about how to interact with products and services and how to seek recourse when harm occurs. Measures such as on-platform user education, disclosure of ad targeting parameters, explainers on the design of algorithmic tools, and transparency about enforcement actions give users meaningful insight into, and choices about, their online experiences.

Multi-dimensional: Safety efforts are cross-functional and interdisciplinary

Designing for safety is inherently interdisciplinary (e.g., Research, Engineering, Behavioral Sciences, Frontline Response, Law) and cross-functional (e.g., Design, UX Research, Data Science, Engineering, Policy, Operations, and Legal). It is also enhanced by engaging cross-sectoral perspectives; companies should share best practices and work with civil society and academia to stay aligned with wider sociopolitical trends.